12 April 2010

Google uses site speed in rankings (since exactly when?)

Google announced last week that site speed is now officially used in their search rankings. It’s only supposed to be one of “over 200 signals” that they use, and it's obviously nowhere near as important as links and keywords. The official blog post from Google says, “We launched this change a few weeks back after rigorous testing.”

I wonder if they were experimenting with this even earlier… Back in October 2009, I noticed that this blog's rankings on Google had dropped by two pages. I also discovered that I had misconfigured some database settings, which made page loads way slow (slower than Blizzard can release SCII, BAM!). When I fixed the db settings, load speed went back to normal and my rankings jumped back to their original spots.

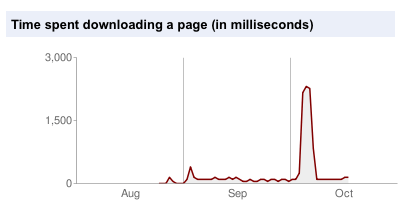

Of course, this could all be a coincidence, or maybe it was related to me being in their sandbox, but check out this “Time spent downloading a page” graph from Google Webmaster Tools and see if you can guess when my rankings dropped and when they returned to their original spots…

Comments (4)

1. Lars wrote:

interesting read, haven't heard about that before. i will have a closer look on the google-articles! thx!

Posted on 7 May 2010 at 5:05 PM | permalink

2. Devin Rhode wrote:

Would you have any idea how to query this site performance data? http://stackoverflow.com/questions/7541520/predict-time-to-fully-load-page-in-javascript

Posted on 25 September 2011 at 11:09 PM | permalink

3. peter wrote:

Hey Devin,

The graph from this post is from my Google Webmaster Tools page - https://www.google.com/webmasters/tools/home, which only I can access. I don’t think Google publicly releases that data for all websites (but I could be wrong).

I’m a little confused by what you’re asking in your StackOverflow post: if you’re doing this all in JavaScript, how are you getting the HTML content for another page (given the AJAX and iframe security restrictions)? Likewise, the answer that suggests reading the size of things from a request's headers relies on an AJAX request, which requires that the thing being requested is on your domain. If these pages are already cached on your domain, then it'd probably be easier to have a view that analyzes them server-side and sends the best result back to the client.

Or are you building this as some kind of browser extension that has more privileges? You could always load the cached page and the actual page in parallel, and if the actual page doesn't load after a given timeout, you show the cached page.
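To make that last idea concrete, here is a rough sketch of "start both loads in parallel, show the real page if it arrives in time, otherwise show the cached one". It's written in Python with `asyncio` purely to illustrate the logic, not as browser code. `load_with_fallback` and the demo loaders are made-up names, and the `asyncio.sleep` calls are stand-ins for real network requests.

```python
import asyncio
from typing import Awaitable, Callable


async def load_with_fallback(
    load_live: Callable[[], Awaitable[str]],
    load_cached: Callable[[], Awaitable[str]],
    timeout_s: float,
) -> str:
    """Return the live page if it loads within timeout_s, else the cached page."""
    # Start both loads immediately so they run in parallel.
    live_task = asyncio.ensure_future(load_live())
    cached_task = asyncio.ensure_future(load_cached())
    try:
        # Wait for the live page, but only up to the timeout.
        page = await asyncio.wait_for(live_task, timeout=timeout_s)
    except asyncio.TimeoutError:
        # wait_for cancels the live load on timeout; use the cached copy.
        return await cached_task
    # The live page arrived in time, so the cached copy is not needed.
    cached_task.cancel()
    return page


# Hypothetical loaders: the sleeps simulate network latency.
async def demo_slow_live() -> str:
    await asyncio.sleep(0.5)
    return "live page"


async def demo_fast_live() -> str:
    await asyncio.sleep(0.01)
    return "live page"


async def demo_cached() -> str:
    await asyncio.sleep(0.01)
    return "cached page"


if __name__ == "__main__":
    print(asyncio.run(load_with_fallback(demo_slow_live, demo_cached, 0.1)))
    print(asyncio.run(load_with_fallback(demo_fast_live, demo_cached, 0.1)))
```

Note that this sketch only falls back on a timeout. If the live load fails with some other error, that error propagates to the caller, so a real version would probably want to handle that case too.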

However, given all that, can you really not get away with just loading the actual page or having an entirely different solution (like how Google pre-caches images of sites)? I don’t know what you’re working on, so maybe not… but maybe… :) Good luck.

Posted on 26 September 2011 at 10:09 AM | permalink

4. peter wrote:

Actually, it looks like they do expose site speeds here: http://pagespeed.googlelabs.com/pagespeed/

Posted on 29 September 2011 at 10:09 AM | permalink